When we first launched, every phone call was scored manually. It was painstaking, human work — listening, evaluating, annotating. But it was worth it. Those early days gave us the clearest understanding of what made a call great, what led to success, and what the best teams consistently did differently.

But as we grew, so did the volume. Manually scoring calls just didn’t scale. Accuracy began to decrease over time, resulting in incorrect recruiter names and scores on phone calls. More importantly, it wasn’t fast enough to give users the real-time insights they needed to coach effectively, spot problems early, and celebrate wins when they happened — not weeks later.

I began to feel immense pressure from our Sales and Leadership teams.

"It has to be more accurate."

"The recruiter names have to be right, or clients will invalidate your entire tool."

ChatGPT had recently emerged, and I started to wonder if we had an opportunity to replace our manual effort with new AI technologies.

For about 6 weeks, I committed myself to discovery. I ran test after test and phone call after phone call, building fake scorecards, asking ChatGPT questions, and manually listening to calls to determine whether the AI was answering correctly. This work felt unproductive, but I knew that I had to ruthlessly prioritize it for the betterment of the product I was building.

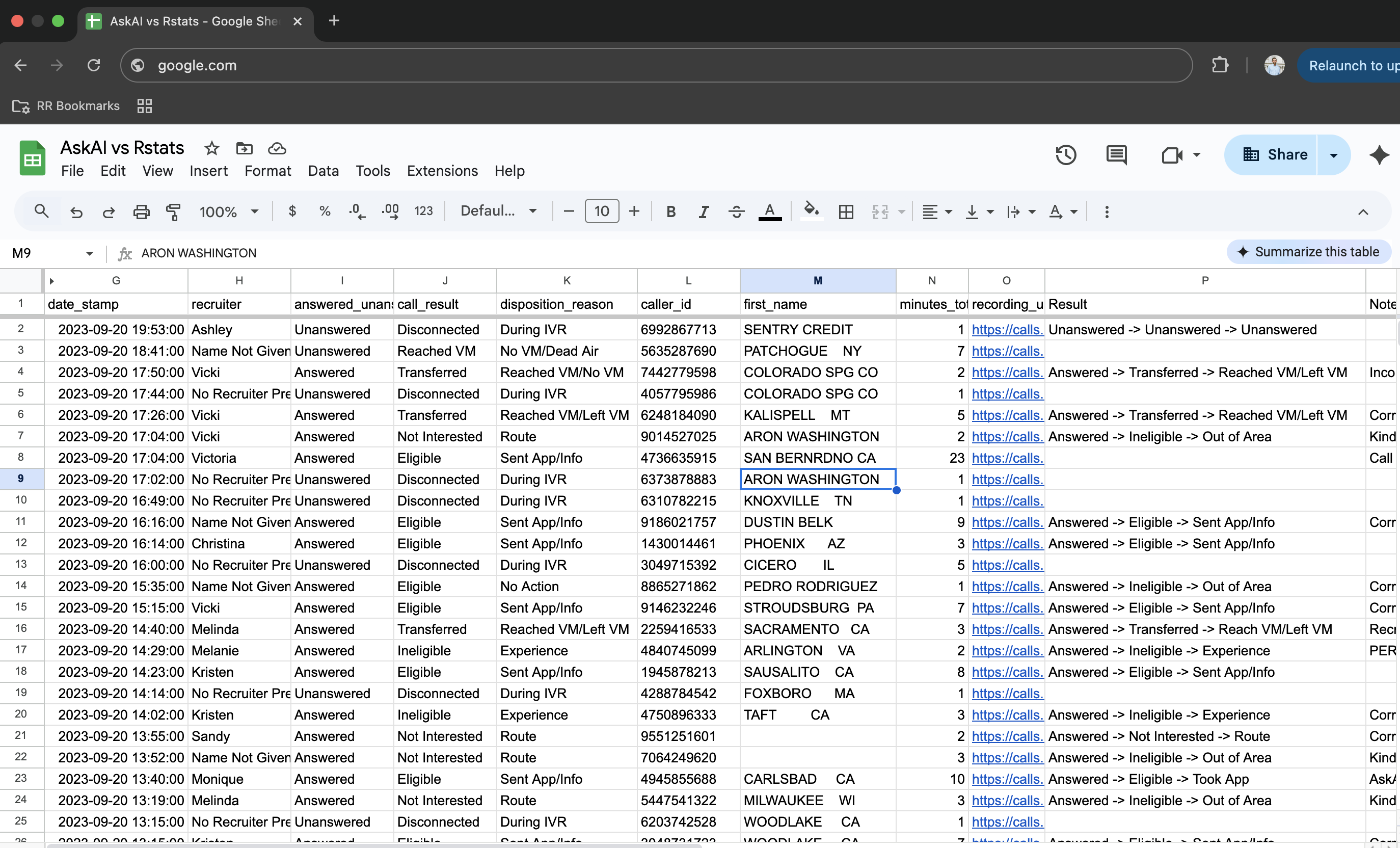

This Google Sheet contains hundreds of calls I scored manually to compare to the AI.
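For anyone curious what that comparison looks like once it leaves a spreadsheet, here is a minimal Python sketch. It is not the logic behind the sheet above: the row layout, the question names, the sample calls, and the `agreement_by_question` helper are all made up for illustration. It assumes each row pairs a human answer with the AI's answer to the same scorecard question, and it counts a match only when the two agree after trimming whitespace and ignoring case.

```python
from collections import defaultdict


def agreement_by_question(rows):
    """Return the share of calls where the AI answer matched the manual answer.

    rows: iterable of (call_id, question, manual_answer, ai_answer) tuples.
    The layout is hypothetical; adapt it to however the scores are exported.
    """
    matches = defaultdict(int)
    totals = defaultdict(int)
    for _call_id, question, manual, ai in rows:
        totals[question] += 1
        # Exact match after normalizing case and surrounding whitespace.
        if manual.strip().lower() == ai.strip().lower():
            matches[question] += 1
    return {q: matches[q] / totals[q] for q in totals}


# Made-up rows for illustration only.
rows = [
    ("call-1", "recruiter_name", "Dana Lee", "dana lee"),
    ("call-2", "recruiter_name", "Sam Ortiz", "Sam Ortega"),
    ("call-1", "asked_about_salary", "yes", "yes"),
    ("call-2", "asked_about_salary", "no", "no"),
]

rates = agreement_by_question(rows)
for question, rate in rates.items():
    print(f"{question}: {rate:.0%} agreement")
```

Breaking the rate out per question matters because an overall average can hide the one field, such as recruiter names, that clients care about most.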

The Decision

At the end of the 6 weeks, I knew this was the direction the product had to go. It had become clear that AI was the future of this tool and of many other tools on the market. The days of humans doing simple, manual work are going away.

After a few discussions with our CEO and CTO, as well as our call analysis team, we set off on the AI journey.

To say that it was challenging would be a gross understatement. After all, the data powering this product had been in the making for 7 years before I arrived, yet no product had ever been built to actually leverage it.

The Grind

What followed was six months of nonstop iteration. Every week, we held standing meetings with engineering, data, and product to dissect what was working and what absolutely wasn’t. One week we were experimenting with machine learning classifiers to predict recruiter identities; the next we were refining prompts in ChatGPT just to get a consistent summary of a call.

It was like trying to build a rocket ship while it was already in the air. Human scoring was continuing in parallel, but it was getting worse by the week — we were burning out the team. Accuracy was slipping, clients were noticing, and the pressure was mounting from every side: Sales, Customer Success, Leadership.

"We need to launch something soon, even if it's not perfect."

The turning point came not when we had nailed the AI, but when we realized we never would — not in a vacuum. After half a year of testing in isolated environments, we made the call: we were going to push this thing over the line.

It wasn’t perfect. Far from it. But we knew the only way to *really* improve was to get real data, from real users, in production. It was the hardest decision I’ve made on this product so far — launching something unfinished — but it was the only path forward.

And those first few weeks? Brutal.

I was taking it in the teeth from every direction. Users were frustrated. Sales was questioning the decision. Leadership was asking hard questions. I had told everyone this move would increase accuracy and speed — and instead, it got worse. Scorecards were off. Recruiter names were still wrong. There were moments where it felt like we’d made a huge mistake.

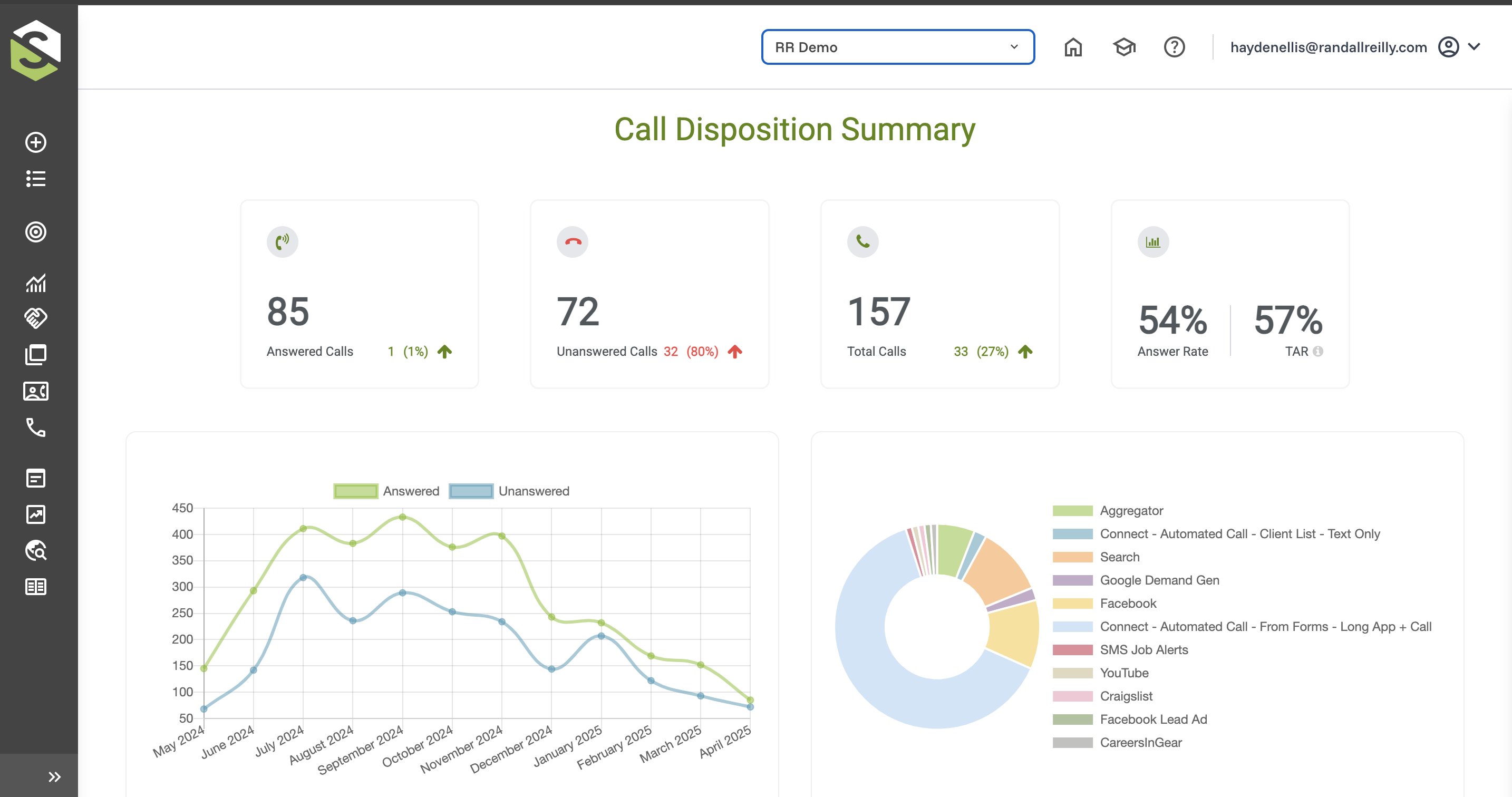

Screenshot of the product.

But I kept reminding myself — and everyone else — that this was part of the plan. I had said from the beginning: it’s going to get worse before it gets better. That didn’t make it easier, but it gave us conviction. We were finally in a position to learn from real usage, not just simulated tests.

That moment marked the beginning of true learning. The instant we launched, the feedback loop got tighter. We could finally see how the AI performed at scale, where it broke, and where it shone. We could move faster. Iterate weekly. Improve daily.

The Outcome

Since launch, our scoring accuracy has skyrocketed. Recruiter names are correctly identified more than 90% of the time — up from an unreliable 60%. Insights are delivered in near real-time. Managers are coaching better. Teams are improving faster.

Most importantly, our users trust the product more — because it works. And when it doesn’t, we know about it immediately and fix it fast.

This journey wasn’t about chasing hype or blindly adding AI. It was about solving a problem our users were feeling deeply. They needed better insights, delivered faster. And now, they have them.

We’re still just getting started. But one thing’s for sure — the future of this product is AI-powered. And it’s going to keep getting smarter.